At o1Labs, we obsess about developers. Our mission is to catalyze a new generation of applications powered by zero knowledge cryptography. As we’ve pursued that mission over the last 5 years, we’ve learned over and over again that to unlock the power of this technology, we must be laser-focused on the developer experience.

Zero knowledge is magical, but it’s also complex. Every friction point in your developer journey, from discovery and learning to trying ‘Hello World’ and building your first application, increases the churn rate and keeps the industry from reaching its potential. That is why we chose TypeScript as our language for zk: to make it accessible to the 10+ million web developers out there who could benefit from the privacy and verifiability that zk can add to many applications.

We feel so strongly about the developer experience that it’s a core pillar of our product strategy and it impacts almost every aspect of our work.

As a developer you know that the edit-compile-debug cycle is crucial to your ‘flow’. The fewer delays and the less waiting you experience, the more you can focus on your core business logic and the better you’ll solve problems and create delightful experiences for your users.

As we worked with zkApp developers in the Mina Protocol Discord and at various hackathons, we could see that the core workflow took too long. While taking application logic and turning it into a provable circuit is complex, we are determined to make it as fast as possible. We observed developers, dogfooded our own framework, and noticed that the majority of changes to an application were not in the core provable part of the logic, but in the surrounding logic. Our toolchain lacked the sophistication to detect this difference, so it regenerated the provable circuits and their keys even when the provable code had not changed.

Implementation

So, we added a long-awaited feature: caching of prover keys. Prover keys are the cryptographic material that enables you to create a zk proof from provable code. They are the counterpart to verifier keys, which enable verification of the proof. Both are generated when a zkApp developer calls `contract.compile()`, and this is usually more expensive than creating a single proof.

When looking into the implementation, we found that Pickles, the recursion layer that o1js is built on, already has built-in support for caching. To enable caching, Pickles not only creates a prover key, but also a hash of the constraint system which is much quicker to compute. We now write both the prover key and the hash to disk. Then, on the next compilation, a cache hit is determined by recomputing the hash and checking that it matches the stored result.
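The hash-and-compare scheme described above can be sketched in a few lines. This is only an illustration of the idea, not how Pickles or o1js implement it: `loadOrGenerateKey`, `hashConstraintSystem`, the `.key`/`.hash` file layout, the use of SHA-256, and the string representation of a constraint system are all assumptions made for the example.

```typescript
import { createHash } from "node:crypto";
import { existsSync, mkdirSync, mkdtempSync, readFileSync, writeFileSync } from "node:fs";
import { tmpdir } from "node:os";
import { join } from "node:path";

// Hypothetical stand-in for the expensive prover key generation step.
type KeyGenerator = (constraintSystem: string) => Uint8Array;

// Cheap fingerprint of the constraint system. SHA-256 is an assumption;
// the post does not say which digest Pickles uses.
function hashConstraintSystem(constraintSystem: string): string {
  return createHash("sha256").update(constraintSystem).digest("hex");
}

function loadOrGenerateKey(
  cacheDir: string,
  name: string,
  constraintSystem: string,
  generate: KeyGenerator
): { key: Uint8Array; cacheHit: boolean } {
  mkdirSync(cacheDir, { recursive: true });
  const keyPath = join(cacheDir, `${name}.key`);
  const hashPath = join(cacheDir, `${name}.hash`);
  const currentHash = hashConstraintSystem(constraintSystem);

  // Cache hit: the stored hash equals the freshly recomputed one.
  if (existsSync(keyPath) && existsSync(hashPath)) {
    const storedHash = readFileSync(hashPath, "utf8");
    if (storedHash === currentHash) {
      return { key: new Uint8Array(readFileSync(keyPath)), cacheHit: true };
    }
  }

  // Cache miss: run the expensive step, then persist both key and hash.
  const key = generate(constraintSystem);
  writeFileSync(keyPath, key);
  writeFileSync(hashPath, currentHash);
  return { key, cacheHit: false };
}

// Usage with a fake generator that counts how often it runs.
const demoDir = mkdtempSync(join(tmpdir(), "key-cache-"));
let generatorCalls = 0;
const fakeGenerate: KeyGenerator = (cs) => {
  generatorCalls++;
  return Buffer.from(`key-for:${cs}`);
};

const first = loadOrGenerateKey(demoDir, "myMethod", "x * y = z", fakeGenerate);
const second = loadOrGenerateKey(demoDir, "myMethod", "x * y = z", fakeGenerate);
const third = loadOrGenerateKey(demoDir, "myMethod", "x * y = z + 1", fakeGenerate);

console.log(first.cacheHit, second.cacheHit, third.cacheHit, generatorCalls);
// prints: false true false 2
```

The point of the design is that the hash is far cheaper to compute than the key, so an unchanged circuit costs one hash plus one file read instead of a full key generation.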

When we first tried out the new caching feature, the result was disappointing: compiling actually took much longer than without caching. What was happening?

Turns out that Pickles behaved suboptimally in that it always regenerated the verifier keys after loading prover keys from cache, even if the verifier keys were themselves cached. Verifier key creation itself is pretty quick. But it so happens that a lot of shared precomputation is required for generating prover keys, generating verifier keys, and proving. All of these operations depend on long lists of elliptic curve points called the SRS and the Lagrange basis. Generating these points used to take almost half of the compilation time! Now, every time we loaded prover keys, we created the SRS and Lagrange bases just to redundantly compute verifier keys. What made this even worse was that Lagrange bases were now created in a single thread, whereas previously, during prover key generation, they were created with the full number of threads.

We ended up fixing all of this: no extra verifier keys are generated, and both the SRS and the Lagrange bases are now cached as well. If they aren’t cached yet, they are always created in parallel (the SRS previously wasn’t). Tested on a simple zkApp in a typical development environment, the net effect was an 85% reduction in compilation time after caching (20s -> 3s).
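To illustrate why caching the shared precomputation matters, here is a minimal in-memory sketch in which three consumers need the same expensive data but it is built only once. Everything here is hypothetical: `memoize`, `buildToyBasis`, and the consumer names are invented for the example, the "basis" is a toy array rather than elliptic curve points, and the real fix also persists the data and builds it in parallel, which this sketch does not show.

```typescript
// Wraps an expensive function so each distinct input is computed only once.
function memoize<T>(compute: (size: number) => T): (size: number) => T {
  const cache = new Map<number, T>();
  return (size: number): T => {
    if (cache.has(size)) return cache.get(size) as T;
    const value = compute(size);
    cache.set(size, value);
    return value;
  };
}

// Hypothetical stand-in for building a Lagrange basis. NOT real cryptography.
let basisBuilds = 0;
function buildToyBasis(domainSize: number): number[] {
  basisBuilds++;
  return Array.from({ length: domainSize }, (_, i) => i * i);
}

const getBasis = memoize(buildToyBasis);

// Three hypothetical consumers that all need the same precomputed data.
const forProverKey = getBasis(8);
const forVerifierKey = getBasis(8);
const forProving = getBasis(8);

console.log(basisBuilds); // prints: 1
console.log(forProverKey === forVerifierKey && forVerifierKey === forProving); // prints: true
```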

Test Example

We performed the following steps to measure the performance improvement from prover key caching. We started with one of the zkApp examples provided in our repo.

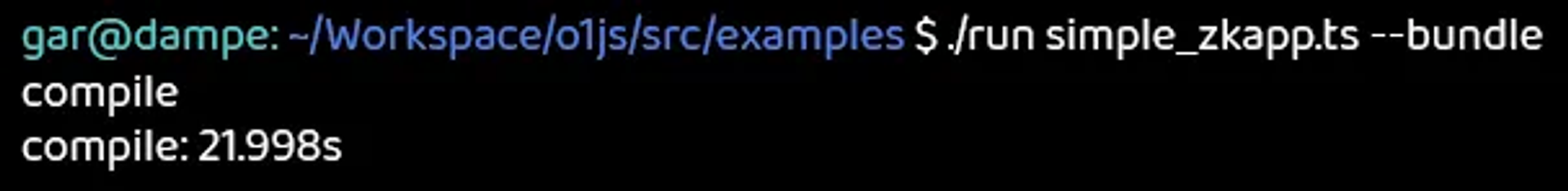

First, we compiled with an older version of o1js (without prover key caching enabled):

About 22 seconds. Not bad, but every time we recompile this zkApp, it will take roughly the same amount of time.

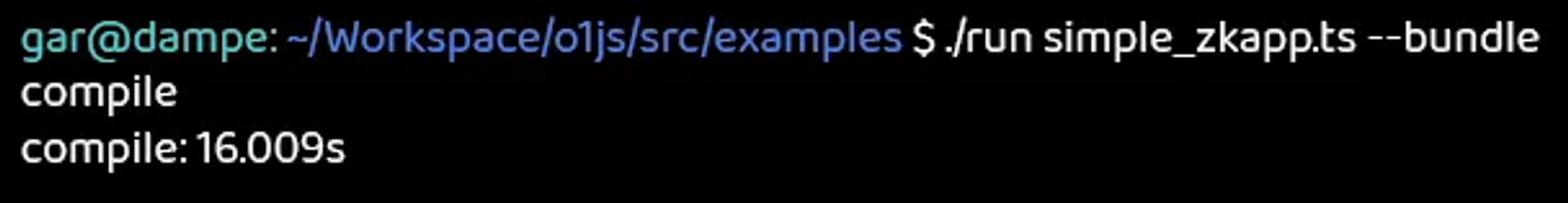

Next, we updated to the latest version of o1js and compiled the zkApp again.

The first thing we noticed is some general performance improvements. Nice! Our baseline is now 16 seconds.

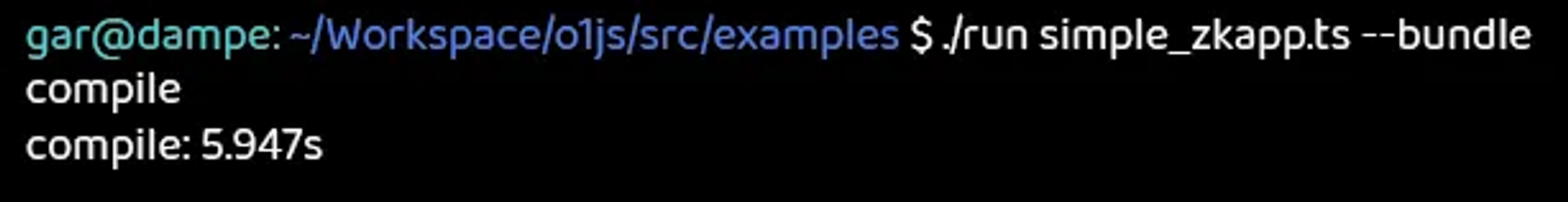

At this point our prover keys have been cached, so the next compile should be faster.

Wow, just under 6 seconds! That’s a 63% improvement!

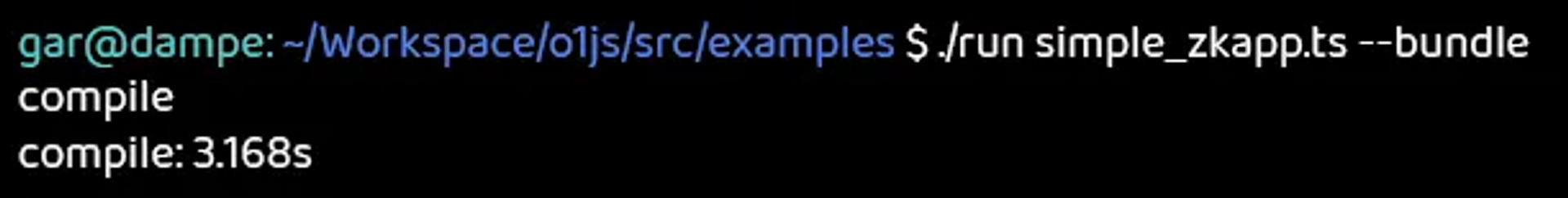

Even better, on subsequent compilations the benefits of prover key caching compound, and in our final test compile time is just over 3 seconds. That’s an 80% improvement over the 16-second baseline for this zkApp.
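For readers who want to check the percentages, the arithmetic is a simple relative reduction. The sketch below uses the rounded timings quoted in the text (16s, 6s, 3s, and the 20s -> 3s figure from the Implementation section), so its results differ slightly from the quoted 63% and 80%, which are based on the exact measured values ("just under 6" and "just over 3" seconds). The helper name `percentReduction` is ours.

```typescript
// Percentage by which `afterSeconds` is smaller than `beforeSeconds`.
function percentReduction(beforeSeconds: number, afterSeconds: number): number {
  return ((beforeSeconds - afterSeconds) * 100) / beforeSeconds;
}

// Rounded timings taken from the text above.
const firstCachedRun = percentReduction(16, 6); // 62.5
const laterCachedRun = percentReduction(16, 3); // 81.25
const implementationFigure = percentReduction(20, 3); // 85

console.log(firstCachedRun, laterCachedRun, implementationFigure);
// prints: 62.5 81.25 85
```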

In summary, by focusing on the developer experience and the core workflow that zkApp developers repeat hundreds of times a day, we were able to identify the performance bottlenecks that really matter and deliver a faster edit/compile/debug cycle. OK, back to the code…

And if you’re interested in developing with o1js, get started here!